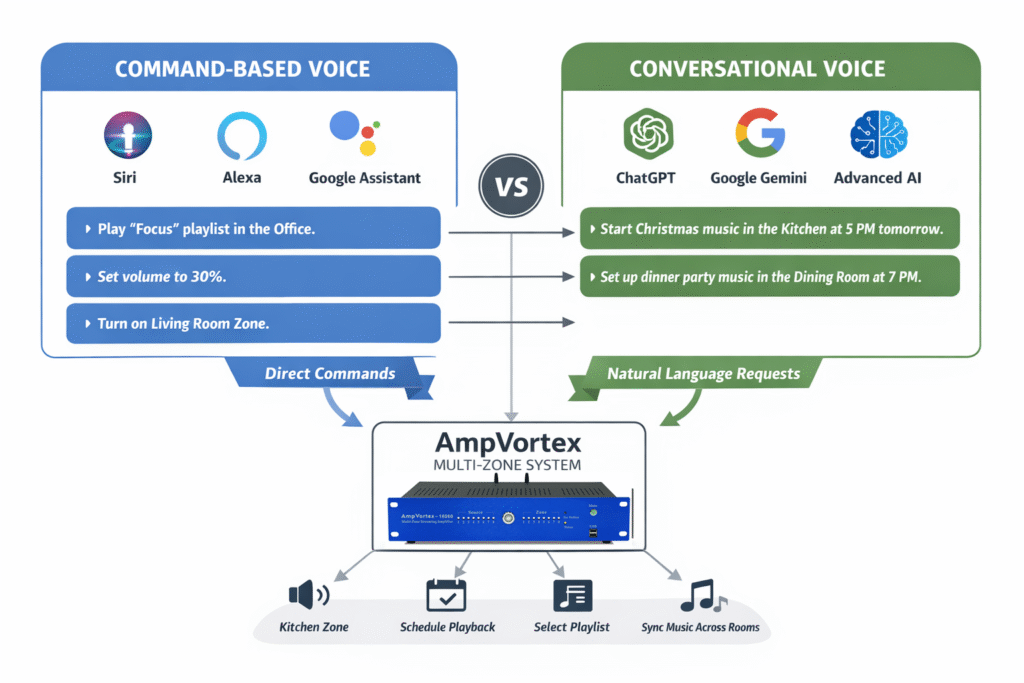

In the world of smart homes and multi-room audio, voice control is one of the most intuitive ways to interact with devices. However, not all voice interactions are the same. Two primary paradigms exist: command-based (imperative) voice and conversational (declarative) voice. Understanding their differences, connections, and applications is crucial for maximizing the capabilities of advanced multi-room streaming amplifiers like AmpVortex.

-

Defining the Two Paradigms

Command-Based Voice:

Users give explicit, direct commands such as “Turn on the living room lights,” “Play jazz in the kitchen,” or “Increase volume by 10%.” These are precise and deterministic, mapping directly to device actions.
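
To make “deterministic” concrete, here is a minimal Python sketch of a command-based parser. It assumes a tiny fixed grammar covering only the three phrasings above and a hypothetical `Command` record. It is not how Siri, Alexa, or AmpVortex actually parse speech. Each rule maps exactly one phrasing to one action, and anything outside the grammar is rejected rather than guessed at.

```python
import re
from dataclasses import dataclass
from typing import Optional


# Hypothetical action record; field names are illustrative, not an AmpVortex API.
@dataclass(frozen=True)
class Command:
    action: str
    zone: Optional[str] = None
    value: Optional[str] = None


# Each rule accepts exactly one phrasing and maps it to one action.
RULES = [
    (re.compile(r"turn (?P<value>on|off) the (?P<zone>[a-z ]+?) lights"), "lights"),
    (re.compile(r"play (?P<value>[a-z ]+?) in the (?P<zone>[a-z ]+)"), "play"),
    (re.compile(r"increase volume by (?P<value>\d+)%"), "volume_up"),
]


def parse_command(utterance: str) -> Optional[Command]:
    """Return the matching Command, or None if the utterance is outside the grammar."""
    text = utterance.strip().rstrip(".").lower()
    for pattern, action in RULES:
        match = pattern.fullmatch(text)  # the whole utterance must match; no partial guesses
        if match:
            groups = match.groupdict()
            return Command(action, groups.get("zone"), groups.get("value"))
    return None


print(parse_command("Play jazz in the kitchen"))
# Command(action='play', zone='kitchen', value='jazz')
```

The rigidity cuts both ways. An unsupported sentence simply returns `None`, which makes failures easy to diagnose. However, “Play something relaxing in the bedroom” would match the `play` rule with the literal query “something relaxing”, because the parser has no notion of mood or intent.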

Conversational/Declarative Voice:

Users express intentions or requests in a natural, contextual way, for example: “I’m hosting a party tonight; start upbeat music in the living room at 7 PM,” or “Play something relaxing in the bedroom.” Handling these requires an AI that can understand context, plan, and execute multiple steps.

-

Comparison Table

| Aspect | Command-Based | Conversational / Declarative |
|---|---|---|
| Precision | High: maps directly to specific actions | Medium: requires interpretation and reasoning |
| Complexity of Tasks | Simple or sequential | Multi-step, contextual, time-based |
| User Effort | Low cognitive load but rigid | Higher cognitive load but flexible |
| Error Handling | Easy to debug: the exact action is requested | Harder: AI may misinterpret context |
| Integration Potential | Works with almost any smart device | Requires advanced AI and contextual awareness |
| Typical Examples | Short commands to Siri, Alexa, Google Assistant | ChatGPT, Google Gemini, AI assistants handling multi-step instructions |

-

Connections Between the Paradigms

Command-based actions act as atomic building blocks, while conversational voice orchestrates these blocks into multi-step workflows. For instance, a request like:

“Prepare background music for dinner in the dining room at 7 PM”

can internally trigger several command-based actions:

- Select zone (dining room)

- Choose playlist or streaming source

- Set volume and crossfade

- Schedule playback

This combination delivers both precision and flexibility.
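
The decomposition above can be sketched in Python as a planner that turns one declarative request into an ordered list of atomic steps, plus a dispatcher that runs each step as an ordinary command. The `Step` type, the step names, and the default playlist, volume, and crossfade values are hypothetical placeholders, not a documented AmpVortex interface. In a real system, the AI layer would choose these values from context.

```python
from dataclasses import dataclass, field
from datetime import time
from typing import Callable, Dict, List


# Hypothetical atomic command; step names mirror the list above, not a real API.
@dataclass
class Step:
    name: str
    args: Dict[str, object] = field(default_factory=dict)


def plan_dinner_music(zone: str, start: time, playlist: str = "Dinner Jazz",
                      volume_pct: int = 25, crossfade_s: int = 6) -> List[Step]:
    """Expand one declarative request into ordered command-based steps.

    The default playlist, volume, and crossfade are placeholder assumptions.
    """
    return [
        Step("select_zone", {"zone": zone}),
        Step("choose_source", {"zone": zone, "playlist": playlist}),
        Step("set_audio", {"zone": zone, "volume_pct": volume_pct, "crossfade_s": crossfade_s}),
        Step("schedule_playback", {"zone": zone, "at": start.isoformat(timespec="minutes")}),
    ]


def execute(steps: List[Step], handlers: Dict[str, Callable[..., None]]) -> None:
    """Dispatch each step as an ordinary command; a missing handler raises KeyError."""
    for step in steps:
        handlers[step.name](**step.args)
```

Calling `plan_dinner_music("dining room", time(19, 0))` yields four steps, the last scheduled for `"19:00"`. Keeping the plan as plain data means each step can be logged, inspected, or retried on its own. This is where the precision of command-based control survives inside a conversational request.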

-

AmpVortex Case Study

AmpVortex multi-zone streaming amplifiers can leverage both paradigms:

- Command-Based Integration:

Short, precise commands control zones, sources, and volume. Example:

“Play Spotify playlist ‘Focus’ in the office.”

- Conversational Integration:

AI interprets multi-step, contextual requests and executes them across zones. Example:

“Tomorrow is Christmas Eve; start 10 Christmas songs in the kitchen at 5 PM.”

Fulfilling it involves scheduling, zone selection, source choice (Apple Music, Spotify, or Qobuz), and synchronized playback. A command-based system alone cannot handle this efficiently, because the user would have to issue each of those steps as a separate command.
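
The interpretation work in the Christmas Eve example can be sketched in Python. The sketch resolves “tomorrow at 5 PM” into a concrete start time, caps the queue at the requested ten tracks, and checks the user’s stated premise. It assumes that track search results have already been fetched from the chosen source and are passed in as a plain list. The `ScheduledPlayback` type and the helper names are hypothetical. Time zones and synchronized playback across zones are deliberately left out.

```python
from dataclasses import dataclass
from datetime import date, datetime, time, timedelta
from typing import List


# Hypothetical result of interpreting the request; not an AmpVortex data type.
@dataclass
class ScheduledPlayback:
    zone: str
    source: str
    start: datetime  # naive local time; time-zone handling is out of scope here
    tracks: List[str]


def schedule_request(today: date, zone: str, source: str, catalog: List[str],
                     count: int, day_offset: int, hour_24: int) -> ScheduledPlayback:
    """Resolve a relative time ("tomorrow at 5 PM") and a track count into one plan.

    `catalog` stands in for search results already fetched from the chosen source.
    """
    if len(catalog) < count:
        raise ValueError(f"{source} returned {len(catalog)} tracks, {count} requested")
    start = datetime.combine(today + timedelta(days=day_offset), time(hour=hour_24))
    return ScheduledPlayback(zone, source, start, catalog[:count])


def is_christmas_eve(d: date) -> bool:
    """Check the user's stated premise before committing the schedule."""
    return (d.month, d.day) == (12, 24)
```

For a request made on 23 December, `schedule_request(today, "kitchen", "Spotify", results, 10, 1, 17)` would produce a start of 24 December at 17:00. If `is_christmas_eve` returned `False`, the assistant could ask for confirmation instead of silently scheduling the wrong day.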

-

Future Outlook

The trend is increasingly toward conversational voice, driven by AI:

- Personalization: Understanding individual preferences, moods, and routines.

- Cross-Device Orchestration: Multi-zone systems like AmpVortex coordinating music, lighting, and other smart devices.

- Proactive Assistance: AI may anticipate needs, e.g., “It’s 5 PM, your evening playlist is ready in the living room.”

Yet, command-based voice remains essential for immediate control, reliability, and error recovery.
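
The proactive-assistance idea above can be illustrated in Python with a minimal sketch. It assumes routines are already stored as (time, zone, playlist) entries. The `Routine` type and the five-minute window are hypothetical. The function only compares the clock against stored routines and phrases a suggestion. It does not start playback, so the user can still confirm or cancel with an ordinary command.

```python
from dataclasses import dataclass
from datetime import datetime, time
from typing import List, Optional


# Hypothetical stored routine; a real assistant would learn these from usage.
@dataclass
class Routine:
    at: time
    zone: str
    playlist: str


def proactive_prompt(now: datetime, routines: List[Routine],
                     window_min: int = 5) -> Optional[str]:
    """Return a suggestion if `now` is within `window_min` minutes after a routine.

    Windows that cross midnight are not handled in this sketch, and the
    message omits minutes for brevity.
    """
    now_min = now.hour * 60 + now.minute
    for r in routines:
        start_min = r.at.hour * 60 + r.at.minute
        if 0 <= now_min - start_min < window_min:
            hour12 = r.at.hour % 12 or 12
            suffix = "AM" if r.at.hour < 12 else "PM"
            return f"It's {hour12} {suffix}, your {r.playlist} playlist is ready in the {r.zone}."
    return None
```

With `Routine(time(17, 0), "living room", "evening")`, a check at 17:02 returns “It's 5 PM, your evening playlist is ready in the living room.” A check at 16:58 or 17:05 returns `None`.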

-

Recommendations for AmpVortex Users

- Hybrid Approach:

Use command-based voice for immediate, precise control and conversational voice for complex, scheduled, or contextual automation.

- AI Bridges:

Integrate AmpVortex with ChatGPT, Google Gemini, or other AI platforms to translate natural language into zone-specific commands.

- Contextual Awareness:

Store playlists, routines, and user preferences to ensure smooth multi-zone orchestration.

- Future-Proofing:

Prepare the system for both explicit commands and nuanced conversational instructions.
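
An AI bridge works best when its output is checked before anything reaches the amplifier. The following Python sketch assumes the AI platform has been prompted to reply with a JSON object containing `zone`, `source`, `query`, and an optional `volume_pct`. These field names, the zone list, and the default volume of 30% are hypothetical. No actual ChatGPT, Gemini, or AmpVortex API is called. Output that fails validation is rejected, so the user can fall back to a precise command.

```python
import json
from dataclasses import dataclass
from typing import Optional

# Hypothetical household configuration; replace with the zones actually set up.
KNOWN_ZONES = {"living room", "kitchen", "dining room", "office", "bedroom"}
KNOWN_SOURCES = {"Spotify", "Apple Music", "Qobuz"}


@dataclass(frozen=True)
class ZoneCommand:
    zone: str
    source: str
    query: str
    volume_pct: int


def validate_ai_output(raw: str) -> Optional[ZoneCommand]:
    """Turn the AI's JSON reply into a ZoneCommand, or None if anything is off."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    zone = str(data.get("zone", "")).lower()
    source = data.get("source")
    query = data.get("query")
    volume = data.get("volume_pct", 30)  # assumed default when the AI omits it
    if zone not in KNOWN_ZONES or source not in KNOWN_SOURCES:
        return None  # never act on a zone or source that does not exist
    if not isinstance(query, str) or not query:
        return None
    # bool is a subclass of int in Python, so exclude it explicitly.
    if not isinstance(volume, int) or isinstance(volume, bool) or not 0 <= volume <= 100:
        return None
    return ZoneCommand(zone, source, query, volume)
```

For example, a reply of `{"zone": "Office", "source": "Spotify", "query": "Focus", "volume_pct": 20}` becomes a command for the office zone. A hallucinated zone such as `"garage"` or a volume of 150 is refused.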

-

Conclusion

Command-based voice ensures precision and reliability, while conversational voice unlocks creativity, flexibility, and orchestration potential. For AmpVortex users, the optimal strategy is hybrid: maintain command precision for immediate tasks, and leverage AI-driven conversational voice for multi-zone, context-aware experiences. As AI technology evolves, these paradigms will converge, making smart audio systems both responsive and anticipatory.