The Symbiosis of AI, Agents, and Music: How Large Language Models and AmpVortex Are Shaping Sound

AI Meets Music

In recent years, artificial intelligence has grown from a niche technology into a pervasive force reshaping creative industries. Music, long a human domain defined by emotion, intuition, and cultural context, is now feeling that influence too, particularly through large language models (LLMs) and intelligent agents.

At first glance, music and LLMs may seem unrelated. Yet language models are fundamentally about patterns, sequences, and prediction, and music shares those qualities. Melodies, rhythms, and chord progressions unfold as sequences of events, much like words in a sentence. Models that learn to predict what comes next in such sequences can analyze, generate, and interact with music in ways that were once the sole province of human composers.
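To make the sequence analogy concrete, here is a deliberately tiny sketch. It is not how any LLM or AmpVortex works: it is a bigram (first-order Markov) model that counts which chord tends to follow which in a progression and then suggests the most frequent successor. Real language models use large neural networks and long contexts, but the core task, predicting the next item from what came before, is similar in spirit. The chord progression and the function names `train_bigrams` and `predict_next` are hypothetical, chosen only for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(sequence):
    """Count how often each chord is immediately followed by each other chord."""
    transitions = defaultdict(Counter)
    for current, following in zip(sequence, sequence[1:]):
        transitions[current][following] += 1
    return transitions


def predict_next(transitions, chord):
    """Return the most frequent successor of `chord`, or None if it was never seen."""
    if chord not in transitions:  # membership test does not create a defaultdict entry
        return None
    return transitions[chord].most_common(1)[0][0]


# Hypothetical pop-style progression written as chord symbols.
progression = ["C", "G", "Am", "F", "C", "G", "Am", "F", "C", "G", "F", "C"]
model = train_bigrams(progression)

print(predict_next(model, "G"))  # prints "Am" (G -> Am twice, G -> F once)
print(predict_next(model, "C"))  # prints "G"
```

A counting model like this only looks one step back, which is exactly the limitation that larger sequence models relax by conditioning on much longer histories.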

Enter AmpVortex

This is where AmpVortex comes into play. As a cutting-edge multi-room streaming amplifier, AmpVortex is built to integrate with AI-driven music systems. Whether the music comes from intelligent agents that adapt playlists in real time or from LLMs that generate dynamic compositions, AmpVortex delivers high-fidelity sound to every room. Imagine composing with AI assistance while hearing your ideas immediately in immersive spatial audio, or hosting a live performance where AmpVortex automatically adapts playback and volume for each space.

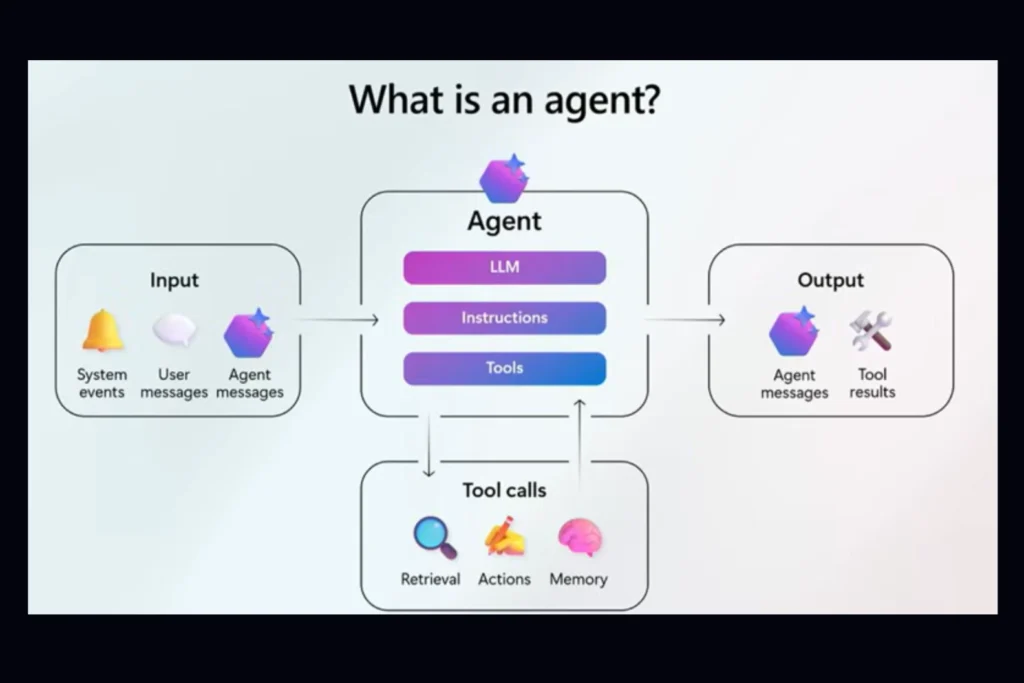

Intelligent Agents as Collaborators

Intelligent agents, powered by AI and connected through systems like AmpVortex, go beyond passive generation: they can listen, learn, and respond dynamically. Virtual bandmates, adaptive DJs, and AI-enhanced practice sessions are no longer theoretical; they become tangible experiences when paired with hardware that can faithfully reproduce every nuance.

Transforming Composition, Performance, and Education

Applications span composition, performance, and education. LLM-driven systems can suggest chord progressions or lyrics tailored to your style, while AmpVortex ensures that every note reaches its full sonic potential. AI agents can interact with live musicians, creating evolving performances that respond to both the audience and the performer. Even music education benefits: students can practice with virtual ensembles powered by AI, experiencing realistic audio playback across multiple rooms thanks to AmpVortex.

Challenges and Questions

Yet this fusion of AI, music, and intelligent audio systems raises important questions. How do we preserve human expression when machines can create music indistinguishable from human work? What ethical frameworks should govern AI-generated compositions? AmpVortex doesn’t answer these questions directly, but it provides a platform where such explorations, with humans and AI collaborating, can take place.

The Future of Music is Hybrid

Ultimately, the convergence of large language models, AI agents, and AmpVortex represents a new frontier in musical creativity. It’s a hybrid ecosystem where technology enhances human ingenuity, turning composition, performance, and listening into intelligent, adaptive experiences. Music is no longer only human: it’s collaborative, interactive, and powered by intelligent sound systems like AmpVortex.