The Epic of Light: A Deep Dive into the Evolution of HDR

Subtitle: From 19th-Century Darkrooms to the Nanosecond Precision of AmpVortex 16-Series.

In the world of high-end home cinema, “Resolution” has reached its plateau. We’ve moved beyond the pixel wars. Today, the true frontier is Dynamic Range. HDR (High Dynamic Range) is the art and science of preserving the “truth of light”—from the blinding glint of a supernova to the subtle textures in a charcoal shadow.

But the journey to perfect HDR was not a straight line. It is a 170-year saga of human ingenuity overcoming physical limitations.

1. The Pre-Digital Foundations: The “Darkroom Alchemists” (1850s – 1980s)

Long before silicon chips, photographers grappled with the Luminance Gap. Allowing for adaptation, the human eye can perceive a dynamic range of nearly 20 stops (each stop is a doubling of light), but early film could barely capture five.
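To put that gap in numbers, here is a minimal sketch of the stop arithmetic. It relies only on the standard photographic definition that one stop is a doubling of light; the function names are illustrative and not taken from any library.

```python
import math


def stops_to_contrast_ratio(stops: float) -> float:
    """Each stop doubles the light, so N stops span a 2**N : 1 contrast ratio."""
    return 2.0 ** stops


def contrast_ratio_to_stops(ratio: float) -> float:
    """Inverse conversion: how many doublings fit inside a given contrast ratio."""
    return math.log2(ratio)


eye_ratio = stops_to_contrast_ratio(20)   # 1,048,576 : 1
film_ratio = stops_to_contrast_ratio(5)   # 32 : 1
```

On these figures, the range attributed to the eye is roughly a million to one, while five stops cover only 32 to one.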

- The Splicing Pioneer (1850s): Gustave Le Gray realized that a single exposure could capture either the clouds (underexposing the sea) or the waves (overexposing the sky), but not both. He pioneered Combination Printing, using two separate negatives, one for the sky and one for the sea. This was the birth of Multi-Exposure Synthesis, the grandfather of modern HDR algorithms.

- The Zone System (1940s): Ansel Adams and Fred Archer revolutionized photography by creating a rigorous framework for pre-visualizing luminance. By dividing a scene into 11 zones (from Zone 0/Black to Zone X/White), each one stop apart, they taught us how to compress a massive natural range into the limited latitude of the photographic print. This “Dynamic Mapping” is the same idea behind the tone mapping that happens today when your display interprets the HDR metadata your AVR passes along.
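The Zone System's mapping can be sketched in a few lines. This is a simplified illustration, not Adams and Archer's full exposure-and-development procedure: it assumes zones are exactly one stop apart and that Zone V sits on 18% middle gray, and the names `MIDDLE_GRAY` and `zone_for_luminance` are hypothetical.

```python
import math

MIDDLE_GRAY = 0.18  # assumption: relative luminance placed on Zone V
ZONE_NAMES = ["0", "I", "II", "III", "IV", "V", "VI", "VII", "VIII", "IX", "X"]


def zone_for_luminance(relative_luminance: float) -> int:
    """Return the zone index (0..10) for a luminance relative to the scene.

    Zones are treated as one stop apart, so the zone is 5 plus the number
    of stops above or below middle gray, clamped to the 11 available zones.
    """
    if relative_luminance <= 0.0:
        return 0
    stops_from_middle = math.log2(relative_luminance / MIDDLE_GRAY)
    zone = 5 + round(stops_from_middle)
    return max(0, min(10, zone))
```

Anything more than five stops away from middle gray is clamped to pure black or pure white, which is the compression step in miniature.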

2. The Digital Renaissance: From CGI to the Pocket (1990s – 2010s)

As movies moved into the digital realm, the industry faced a crisis: digital sensors were “linear,” but human vision is “logarithmic.” We needed a new math.

- The Debevec Breakthrough: In 1997, Paul Debevec and Jitendra Malik presented a seminal paper at SIGGRAPH, demonstrating how to recover high-dynamic-range radiance maps from a series of ordinary photographs taken at different exposures. This allowed Hollywood to use 32-bit floating-point files for CGI, ensuring that digital explosions felt as “heavy” as real ones.

- The Mobile Democratization: When the iPhone 4 gained an HDR camera mode in 2010, HDR became a household buzzword. However, this was “Computational HDR”: a software trick that merged bracketed frames into one balanced image. While it lacked the true luminance peaks of modern displays, it primed the global market for a world where “Standard Dynamic Range (SDR)” was no longer enough.
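The frame-merging idea behind both radiance maps and phone HDR can be sketched for a single pixel. This is a simplified illustration and not the published Debevec algorithm: it assumes the camera response is already linear (the original method also recovers the response curve), and the helper names `hat_weight` and `merge_exposures` are hypothetical.

```python
def hat_weight(z: float) -> float:
    """Trust mid-tones most; the weight falls to 0 at clipped black (0.0) or white (1.0)."""
    return 1.0 - abs(2.0 * z - 1.0)


def merge_exposures(pixel_values, exposure_times):
    """Estimate relative radiance for one pixel from bracketed exposures.

    pixel_values: linear sensor values in [0, 1], one per exposure.
    exposure_times: matching shutter times in seconds.
    """
    weighted_sum = 0.0
    weight_total = 0.0
    for z, t in zip(pixel_values, exposure_times):
        w = hat_weight(z)
        weighted_sum += w * (z / t)  # radiance estimate from this frame
        weight_total += w
    if weight_total == 0.0:
        # Every sample is clipped: fall back to the shortest exposure.
        z, t = min(zip(pixel_values, exposure_times), key=lambda pair: pair[1])
        return z / t
    return weighted_sum / weight_total


# A pixel with true radiance 2.0 shot at 1/8 s, 1/4 s and 1 s.
# The 1 s frame clips to 1.0 and is ignored by the weighting.
radiance = merge_exposures([0.25, 0.5, 1.0], [0.125, 0.25, 1.0])
```

The clipped long exposure contributes nothing, so the estimate comes from the two frames that still hold real information.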

3. The “Nit” Wars: The Era of Standardized Brilliance (2014 – 2020)

The real revolution happened when we moved from capturing HDR to displaying it. This required a total overhaul of the video signal chain, moving from the old Gamma curve to PQ (Perceptual Quantizer, standardized as SMPTE ST 2084), which encodes absolute luminance up to 10,000 nits.
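For readers who want to see the PQ curve itself, here is a minimal sketch of its transfer function using the constants published in SMPTE ST 2084. The function names are illustrative, and the code treats a single normalized signal value rather than a full video pipeline.

```python
# Constants defined by SMPTE ST 2084.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10000.0


def pq_eotf(signal: float) -> float:
    """Convert a PQ-encoded signal in [0, 1] to display luminance in nits."""
    e = min(max(signal, 0.0), 1.0) ** (1.0 / M2)
    numerator = max(e - C1, 0.0)
    denominator = C2 - C3 * e
    return PEAK_NITS * (numerator / denominator) ** (1.0 / M1)


def pq_inverse_eotf(nits: float) -> float:
    """Convert luminance in nits to a PQ-encoded signal in [0, 1]."""
    y = min(max(nits / PEAK_NITS, 0.0), 1.0) ** M1
    return ((C1 + C2 * y) / (1.0 + C3 * y)) ** M2
```

With this curve, 100 nits lands near the middle of the signal range (about 0.51) and 1,000 nits near 0.75, so most code values are spent where the eye is most sensitive.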

- Dolby Vision & The Dynamic Shift: In 2014, Dolby revolutionized the industry by introducing Dynamic Metadata. Unlike HDR10, whose static metadata describes brightness once for the whole movie, Dolby Vision allows the director to tune the image scene-by-scene or even frame-by-frame. This required a massive leap in processing power, not just for the TV but for the AVR sitting in the middle.

- The Format Fragmentation: We saw a “War of Standards”—HDR10 (the open baseline), HLG (the broadcast-friendly curve), and HDR10+ (the dynamic open alternative). Displays began to hit 1,000, 2,000, and even 4,000 nits. The challenge shifted from “How bright can we go?” to “How can we transmit this much data without losing the metadata?”
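The difference between static and dynamic metadata can be shown with a toy tone mapper. This is a deliberately naive sketch: real displays use proprietary curves, and the knee-based linear compression, the `knee_fraction` parameter, and the function name are all assumptions made for illustration.

```python
def tone_map(nits: float, content_peak: float, display_peak: float,
             knee_fraction: float = 0.5) -> float:
    """Map a pixel's luminance onto a display with a lower peak.

    Values below the knee pass through unchanged. Values between the knee
    and content_peak are compressed linearly into the remaining headroom.
    """
    knee = knee_fraction * display_peak
    if content_peak <= display_peak or nits <= knee:
        return min(nits, display_peak)
    scale = (display_peak - knee) / (content_peak - knee)
    return min(knee + (nits - knee) * scale, display_peak)


DISPLAY_PEAK = 1000.0

# A 1,500-nit highlight in a scene that peaks at 1,500 nits,
# inside a movie whose brightest moment is 4,000 nits.
static_result = tone_map(1500.0, content_peak=4000.0, display_peak=DISPLAY_PEAK)
dynamic_result = tone_map(1500.0, content_peak=1500.0, display_peak=DISPLAY_PEAK)
```

Under these assumptions, the static case renders the highlight at roughly 643 nits because it must reserve headroom for a 4,000-nit peak that never appears in this scene, while the per-scene case uses the display's full 1,000 nits.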

4. The Final Frontier: The AVR as the “Guardian of Integrity” (2020 – 2026)

We are now in the most complex era of HDR history. As we push toward 4K/120Hz and 8K HDR, the sheer volume of data (up to 48 Gbps over HDMI 2.1) has exposed a “Silent Bottleneck” in the home theater: the traditional Audio/Video Receiver (AVR).
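A rough calculation shows why the link fills up so quickly. The sketch below counts only active pixels for uncompressed 4:4:4 video and ignores blanking intervals and link-encoding overhead, so real requirements are somewhat higher; the function and constant names are illustrative.

```python
HDMI_2_1_MAX_GBPS = 48.0  # maximum link rate cited above


def active_video_gbps(width: int, height: int, fps: int,
                      bits_per_component: int, components: int = 3) -> float:
    """Raw pixel data rate in Gbps (active pixels only, no blanking or overhead)."""
    bits_per_second = width * height * fps * bits_per_component * components
    return bits_per_second / 1e9


uhd_4k_120 = active_video_gbps(3840, 2160, 120, 10)  # about 29.9 Gbps
uhd_8k_60 = active_video_gbps(7680, 4320, 60, 10)    # about 59.7 Gbps
```

By this estimate, 10-bit 4K/120Hz fits within the link, while 10-bit 8K at 60Hz in full 4:4:4 does not, which is why 8K HDR signals typically rely on chroma subsampling or Display Stream Compression.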

- The Metadata Collapse: Many “HDR-compatible” AVRs suffer from micro-timing errors. If the dynamic metadata packet for a Dolby Vision frame arrives even a few milliseconds out of sync with the video clock, the TV can fall back to a generic tone map. The result? A flat, dull image on a premium $5,000 OLED.

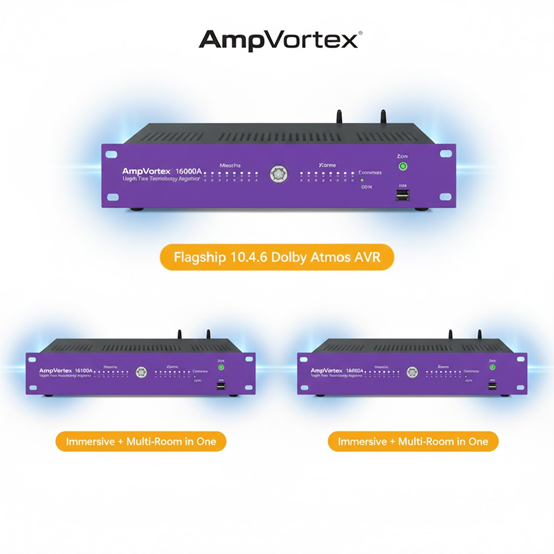

- The AmpVortex Solution: This is why we engineered the AmpVortex 16-Series differently. Most AVRs treat video as a passive “pass-through.” We treat it as a high-precision digital stream. By implementing Independent Clock Domain Isolation, we ensure that the heavy lifting of 16-channel immersive audio processing never interferes with the nanosecond precision required for 8K HDR metadata.

Conclusion: Respecting the Journey of Light

The history of HDR is a journey of Preservation. From Le Gray’s darkroom to the AmpVortex 16200A, the goal has always been the same: to ensure that the light the director intended is exactly the light that reaches your eyes.

In 2026, the question is no longer “Is my TV HDR?” but “Is my system capable of delivering the truth?” When the AVR stops being the bottleneck, the 170-year dream of perfect light finally becomes a reality.